01

Journal: Inform. Syst.

Impact factor (IF): 2.466

Publication date: 2020-10-30

Abstract

Cloud storage allows organizations to store data at remote sites of service providers. Although cloud storage services offer numerous benefits, they also involve new risks and challenges with respect to data security and privacy aspects. To preserve confidentiality, data must be encrypted before outsourcing to the cloud. Although this approach protects the security and privacy aspects of data, it also impedes regular functionality such as executing queries and performing analytical computations. To address this concern, specific data encryption schemes (e.g., deterministic, random, homomorphic, order-preserving, etc.) can be adopted that still support the execution of different types of queries (e.g., equality search, full-text search, etc.) over encrypted data.

However, these specialized data encryption schemes have to be implemented and integrated in the application, and their adoption introduces an extra layer of complexity in the application code. Moreover, as these schemes imply trade-offs between performance and security, storage efficiency, etc., making the appropriate trade-off is a challenging and non-trivial task. In addition, to support aggregate queries, User Defined Functions (UDFs) have to be implemented directly in the database engine, and these implementations are specific to each underlying data storage technology, which demands expert knowledge and in turn increases management complexity.

In this paper, we introduce CryptDICE, a distributed data protection system that (i) provides built-in support for a number of different data encryption schemes, made accessible via annotations that represent application-specific (search) requirements; (ii) supports making appropriate trade-offs and executing these encryption decisions at diverse levels of data granularity; and (iii) integrates a lightweight service that performs dynamic deployment of User Defined Functions (UDFs), without performing any alteration directly in the database engine, for heterogeneous NoSQL databases in order to realize low-latency aggregate queries and to avoid expensive data shuffling (from the cloud to an on-premise data center). We have validated CryptDICE in the context of a realistic industrial SaaS application and carried out an extensive functional validation, which shows the applicability of the middleware platform. In addition, our experimental evaluation efforts confirm that the performance overhead of CryptDICE is acceptable and validate the performance optimizations for achieving low-latency aggregate queries.
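The abstract notes that deterministic schemes still allow equality search over protected data. A minimal sketch of that idea follows. It is not CryptDICE's implementation: it uses a keyed hash (HMAC-SHA256) from the Python standard library as a stand-in for a deterministic scheme, so the tokens can be compared but not decrypted. The key, the sample records, and the function names are hypothetical.

```python
import hashlib
import hmac


def equality_token(key: bytes, value: str) -> str:
    """Deterministic keyed token: equal plaintexts always give equal tokens."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key and records, for illustration only.
KEY = b"demo-key-not-for-production"
records = ["alice@example.com", "bob@example.com", "alice@example.com"]

# The client uploads only tokens, so the server never sees the plaintext.
server_index = [equality_token(KEY, r) for r in records]


def equality_search(index: list[str], key: bytes, query: str) -> list[int]:
    """Return the positions whose stored token matches the query's token."""
    token = equality_token(key, query)
    return [i for i, t in enumerate(index) if hmac.compare_digest(t, token)]


matches = equality_search(server_index, KEY, "alice@example.com")
print(matches)
```

The same determinism that enables the search also reveals which records are equal, which is one instance of the performance versus security trade-off the abstract mentions.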

Link:

https://www.sciencedirect.com/science/article/abs/pii/S0306437920301289

02

Journal: J. Cloud Comp.

Impact factor (IF): 2.788

Publication date: 2020-11-26

Abstract

Currently, rapidly developing digital technological innovations affect and change the integrated information management processes of all sectors. The high efficiency of these innovations has inevitably pushed the health sector into a digital transformation process to improve the technologies and methodologies used to optimize healthcare management systems. In this transformation, the Internet of Things (IoT) technology plays an important role, which enables many devices to connect and work together. IoT allows systems to work together using sensors, connection methods, internet protocols, databases, cloud computing, and analytics as infrastructure. In this respect, it is necessary to establish the necessary technical infrastructure and a suitable environment for the development of smart hospitals. This study points out the optimization factors, challenges, available technologies, and opportunities, as well as the system architecture that arise from employing IoT technology in smart hospital environments. In order to do that, the required technical infrastructure is divided into five layers, and the system infrastructure, constraints, and methods needed in each layer are specified, including the smart hospital's dimensions and the extent of intelligent computing and real-time big data analytics. As a result of the study, the deficiencies that may arise in each layer for the smart hospital design model and the factors that should be taken into account to eliminate them are explained. The study is expected to provide a road map to managers, system developers, and researchers interested in optimizing the design of the smart hospital system.

Link:

https://journalofcloudcomputing.springeropen.com/articles/10.1186/s13677-020-00215-5

03

Journal: Theor. Comput. Sci.

Impact factor (IF): 0.747

Publication date: 2020-10-15

Abstract

Recently, studying fundamental graph problems in the Massively Parallel Computation (MPC) framework, inspired by the MapReduce paradigm, has gained a lot of attention. An assumption common to a vast majority of approaches is to allow near-linear memory per machine, that is, memory linear in n up to polylogarithmic factors, where n is the number of nodes in the graph. However, as pointed out by Karloff et al. [SODA'10] and Czumaj et al. [STOC'18], it might be unrealistic for a single machine to have linear or only slightly sublinear memory.

In this paper, we thus study a more practical variant of the MPC model that only requires substantially sublinear or even subpolynomial memory per machine. In contrast to the linear-memory model and also to streaming algorithms, in this low-memory setting, a single machine will only see a small number of nodes in the graph. We introduce a new and strikingly simple technique to cope with this imposed locality.

In particular, we show that the Maximal Independent Set (MIS) problem can be solved efficiently when the input graph is a tree. The resulting round complexity constitutes an almost exponential speed-up over the low-memory MPC algorithm in a concurrent work by Ghaffari and Uitto [SODA'19], and the algorithm substantially reduces the local memory required by the recent MIS algorithm of Ghaffari et al. [PODC'18], without incurring a significant loss in the round complexity. Moreover, it demonstrates how to make use of the all-to-all communication in the MPC model to almost exponentially improve on the corresponding bound in the models considered by Lenzen and Wattenhofer [PODC'11].
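To make the problem statement concrete, here is a minimal sequential sketch of what a maximal independent set on a tree is. It is a plain greedy baseline, not the low-memory parallel algorithm of the paper, and the function names and the example tree are hypothetical.

```python
from collections import defaultdict


def build_adjacency(edges):
    """Undirected adjacency sets from a list of (u, v) edges."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def greedy_mis(nodes, edges):
    """Sequential greedy: take a node unless a neighbor was already taken."""
    adj = build_adjacency(edges)
    chosen, blocked = set(), set()
    for v in nodes:
        if v not in blocked:
            chosen.add(v)
            blocked.add(v)
            blocked.update(adj[v])
    return chosen


def is_maximal_independent(nodes, edges, chosen):
    """Check independence (no edge inside) and maximality (no node can be added)."""
    adj = build_adjacency(edges)
    independent = all(not (u in chosen and v in chosen) for u, v in edges)
    maximal = all(v in chosen or adj[v] & chosen for v in nodes)
    return independent and maximal


# Hypothetical example tree: 0 is the root, 1 and 2 its children, 3 and 4 below 1.
tree_nodes = [0, 1, 2, 3, 4]
tree_edges = [(0, 1), (0, 2), (1, 3), (1, 4)]
mis = greedy_mis(tree_nodes, tree_edges)
print(sorted(mis))
```

The greedy pass needs to see the whole graph in order, which is exactly what a machine with strongly sublinear memory cannot do; that gap is what the abstract's technique addresses.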


Link:

https://www.sciencedirect.com/science/article/pii/S0304397520305788

04

Journal: J. Comput. Syst. Sci.

Impact factor (IF): 1.494

Publication date: 2020-11-19

Abstract

In k-Clustering we are given a multiset of $n$ vectors $X \subset \mathbb{Z}^d$ and a nonnegative number $D$, and we need to decide whether $X$ can be partitioned into $k$ clusters $C_1, \dots, C_k$ such that the cost

$$\sum_{i=1}^{k} \min_{c_i \in \mathbb{R}^d} \sum_{x \in C_i} \|x - c_i\|_p^p \le D,$$

where $\|\cdot\|_p$ is the $L_p$-norm. For $p=2$, k-Clustering is k-Means. We study k-Clustering from the perspective of parameterized complexity. The problem is known to be NP-hard for $k=2$ and also for $d=2$. It is a long-standing open question, whether the problem is fixed-parameter tractable (FPT) for the combined parameter $d+k$. In this paper, we focus on the parameterization by $D$. We complement the known negative results by showing that for $p=0$ and $p=\infty$, k-Clustering is W[1]-hard when parameterized by $D$. Interestingly, we discover a tractability island of k-Clustering: for every $p \in (0,1]$, k-Clustering is solvable in time $2^{O(D \log D)} (nd)^{O(1)}$.
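The cost in the definition above can be evaluated directly for a given partition. The sketch below is a minimal illustration restricted to p = 1 and p = 2, where the optimal center is known in closed form per coordinate (the median for p = 1, the mean for p = 2). It only checks one fixed partition against the budget D; it does not solve the decision problem, which ranges over all partitions. The function names and sample points are hypothetical.

```python
from statistics import fmean, median


def cluster_cost(cluster, p):
    """Minimum over centers c of the sum of ||x - c||_p^p, for p in {1, 2}.

    The p-th power of the L_p-norm is a sum over coordinates, so each
    coordinate of the center can be optimized independently.
    """
    if p not in (1, 2):
        raise ValueError("this sketch only handles p = 1 and p = 2")
    best_center = median if p == 1 else fmean
    cost = 0.0
    for coords in zip(*cluster):  # one coordinate at a time
        c = best_center(coords)
        cost += sum(abs(x - c) ** p for x in coords)
    return cost


def clustering_cost(clusters, p):
    """Total cost of a partition given as a list of clusters."""
    return sum(cluster_cost(cluster, p) for cluster in clusters)


def within_budget(clusters, p, budget):
    """True if this particular partition certifies cost <= D."""
    return clustering_cost(clusters, p) <= budget


# Hypothetical integer points in Z^2, split into k = 2 clusters.
partition = [[(0, 0), (2, 0), (4, 0)], [(10, 10)]]
print(clustering_cost(partition, 2), clustering_cost(partition, 1))
```

For this partition the k-Means cost (p = 2) is 8 and the p = 1 cost is 4, so a budget of D = 5 is met only in the p = 1 case.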

Link:

https://www.sciencedirect.com/science/article/pii/S0022000020300982

05

Journal: Int. J. Comput. Sci. Eng.

Impact factor (IF): 2.644

Publication date: 2020-11-26

Abstract

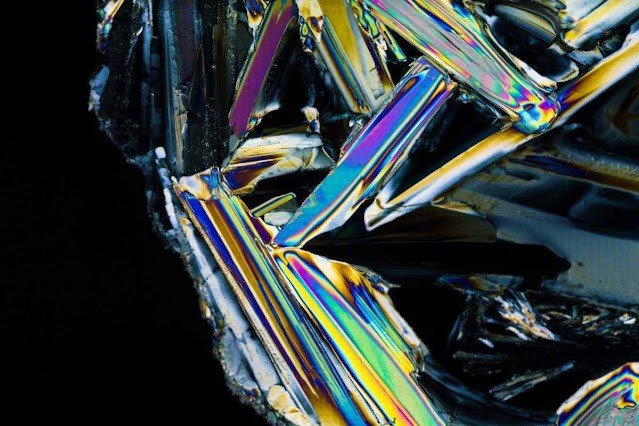

The present paper develops a fast method to simulate the solidification structure of continuous billets with a Cellular Automaton (CA) model. The traditional solution of the CA model on a single CPU takes a long time because of the massive datasets and complicated calculations, making it unrealistic to optimize the parameters through numerical simulation. In this paper, a parallel method based on Graphics Processing Units (GPU) is proposed to accelerate the calculation, with new algorithms for solute redistribution and neighbor capture that avoid data races in parallel computing. This new method was applied to simulate the solidification structure of Fe-0.64C alloy, and the simulated results were in good agreement with the experimental results obtained with the same parameters. The absolute computational time for the fast method implemented on a Tesla P100 GPU is 277 s, while the traditional method implemented on a single core of an Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40 GHz takes 24.57 h. The speedup, the ratio of the absolute computational time of CPU-CA to that of GPU-CA, varies from 300 to 400 as the number of grid cells increases.
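The reported timings can be checked against the stated speedup range with a short calculation. The sketch below uses only the two figures quoted in the abstract (24.57 h on the CPU, 277 s on the GPU); the variable names are hypothetical.

```python
# Timings quoted in the abstract.
cpu_hours = 24.57    # single-core CPU-CA run
gpu_seconds = 277.0  # GPU-CA run on Tesla P100

# Convert to a common unit before taking the ratio.
cpu_seconds = cpu_hours * 3600.0

# Speedup = CPU time / GPU time.
speedup = cpu_seconds / gpu_seconds
print(f"speedup: {speedup:.1f}x")
```

This gives a speedup of roughly 319, which falls inside the 300 to 400 range reported for the different grid sizes.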

Link:

https://www.sciencedirect.com/science/article/abs/pii/S1877750320305639
